Foreign malign influence in the U.S. presidential election got off to a slower start than in 2016 and 2020 due to the less contested primary season. Russian efforts are focused on undermining U.S. support for Ukraine, while China seeks to exploit societal polarization and diminish faith in U.S. democratic systems. Additionally, fears that sophisticated AI deepfake videos would succeed in voter manipulation have not yet been borne out, but simpler “shallow” AI-enhanced and AI audio fake content will likely have more success. These insights and analysis are contained in the second Microsoft Threat Intelligence Election Report published today.

Russia deeply invested in undermining U.S. support for Ukraine

Russian influence operations (IO) have picked up steam in the past two months. The Microsoft Threat Analysis Center (MTAC) has tracked at least 70 Russian actors engaged in Ukraine-focused disinformation, using traditional and social media and a mix of covert and overt campaigns.

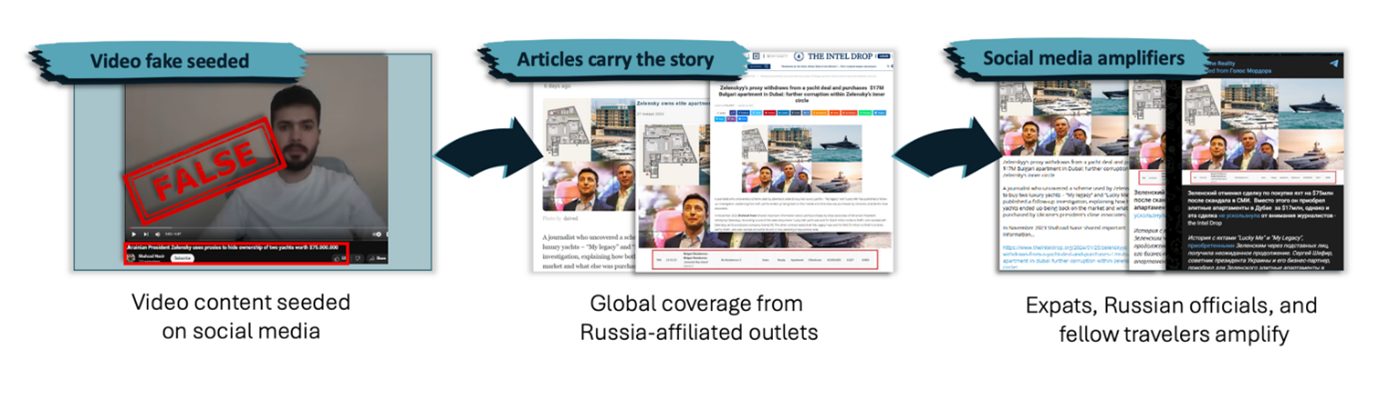

For example, the actor Microsoft tracks as Storm-1516 has successfully laundered anti-Ukraine narratives into U.S. audiences using a consistent pattern across multiple languages. Typically, this group follows a three-stage process:

- An individual presents as a whistleblower or citizen journalist, seeding a narrative on a purpose-built video channel

- The video is then covered by a seemingly unaffiliated global network of covertly managed websites

- Russian expats, officials, and fellow travelers then amplify this coverage

Ultimately, U.S. audiences repeat and repost the disinformation, likely unaware of the original source.

China seeks to widen societal divisions and undermine democratic systems

China is using a multi-tiered approach in its election-focused activity. It capitalizes on existing socio-political divides and aligns its attacks with partisan interests to encourage organic circulation.

China’s increasing use of AI in election-related influence campaigns is where it diverges from Russia. While Russia’s use of AI continues to evolve in impact, People’s Republic of China (PRC) and Chinese Communist Party (CCP)-linked actors leverage generative AI technologies to effectively create and enhance images, memes, and videos.

Limited activity so far from Iran, a frequent late-game spoiler

Iran’s past behavior suggests it will likely launch acute cyber-enabled influence operations closer to U.S. Election Day. Tehran’s election interference strategy adopts a distinct approach: combining cyber and influence operations for greater impact. The ongoing conflict in the Middle East may mean Iran evolves its planned goals and efforts directed at the U.S.

Generative AI in Election 2024 – risks remain but differ from the expected

Since the democratization of generative AI in late 2022, many have feared this technology will be used to change the outcome of elections. MTAC has worked with multiple teams across Microsoft to identify, triage, and analyze malicious nation-state use of generative AI in influence operations.

In short, the use of high-production synthetic deepfake videos of world leaders and candidates has so far not caused mass deception and broad-based confusion. In fact, we have seen that audiences are more likely to gravitate towards and share simple digital forgeries, which influence actors have used over the past decade, such as false news stories with real news agency logos embossed on them.

Audiences do fall for generative AI content on occasion, though the scenarios that succeed have considerable nuance. Our report today explains how the following factors contribute to generative AI risk to elections in 2024:

- AI-enhanced content is more influential than fully AI-generated content

- AI audio is more impactful than AI video

- Fake content purporting to come from a private setting, such as a phone call, is more effective than fake content from a public setting, such as a deepfake video of a world leader

- Disinformation messaging has more cut-through during times of crisis and breaking news

- Impersonations of lesser-known people work better than impersonations of very well-known people, such as world leaders

Leading up to Election Day, MTAC will continue identifying and analyzing malicious generative AI use and will update our assessment incrementally, as we expect Russia, Iran, and China will all increase the pace of influence and interference activity as November approaches. One critical caveat: if a sophisticated deepfake is launched to influence the election in November, the tool used to make it has likely not yet entered the marketplace. Video, audio, and image AI tools of increasing sophistication enter the market nearly every day. The above assessment arises from what MTAC has observed thus far, but it is difficult to know what we’ll observe from generative AI between now and November.

More broadly, as both generative AI and the geopolitical goals of Russia, Iran, and China evolve between now and November, risks to the 2024 election may shift with time.