Editor’s Note: This post is a chapter written by Microsoft Vice Chair and President Brad Smith for Microsoft’s report AI in Europe: Meeting the opportunity across the EU. The ideas expressed here were covered in his keynote speech at the ‘Europe’s Digital Transformation – Embracing the AI Opportunity’ event in Brussels on June 29, 2023.

You can read the global report Governing AI: A Blueprint for the Future here.

There are enormous opportunities to harness the power of AI to contribute to European growth and values. But another dimension is equally clear. It’s not enough to focus only on the many opportunities to use AI to improve people’s lives. We need to focus with equal determination on the challenges and risks that AI can create, and we need to manage them effectively.

This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet, five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool – in this case, aimed at democracy itself.

Today, we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead. As technology moves forward, it’s just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. We also recognize, however, that the guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone. In short, tech companies will need to step up, and governments will need to move faster.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else – accountability. This is the fundamental need to ensure that machines remain subject to effective oversight by people, and that the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: People who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

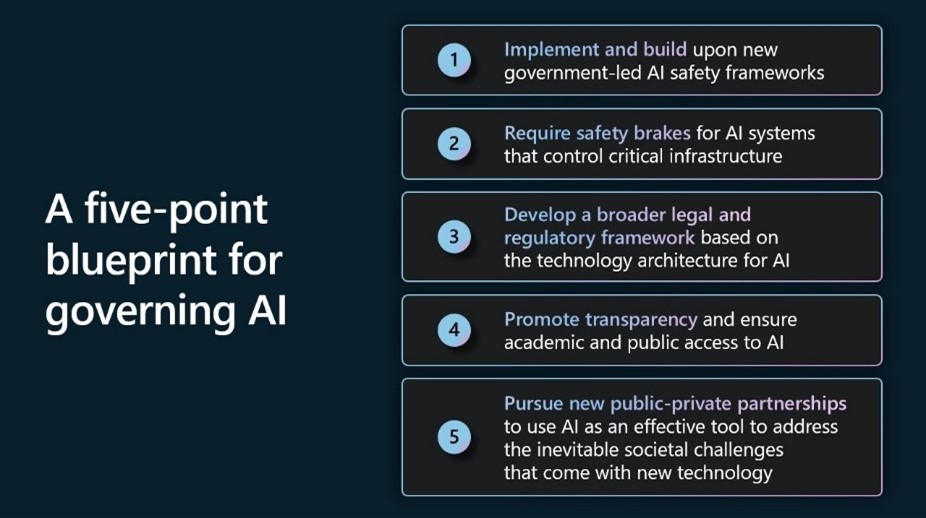

In May, Microsoft released a whitepaper, Governing AI: A Blueprint for the Future, which sought to address the question of how best to govern AI and set out Microsoft’s five-point blueprint.

The blueprint builds on lessons learned from many years of work, investment, and input. Here, we draw some of that thinking together with a specific focus on Europe, its leadership on AI regulation, and what a viable path to advancing AI governance internationally may look like.

With the European Parliament’s recent vote on the European Union’s Artificial Intelligence (AI) Act and ongoing trilogue discussions, Europe is now at the forefront of establishing a model for guiding and regulating AI technology.

From early on, we’ve been supportive of a regulatory regime in Europe that effectively addresses safety and upholds fundamental rights while continuing to enable innovations that will ensure that Europe remains globally competitive. Our intention is to offer constructive contributions to help inform the work ahead. Collaboration with leaders and policymakers across Europe and around the world is essential.

In this spirit, we want to expand upon our five-point blueprint, highlight how it aligns with EU AI Act discussions, and provide some thoughts on the opportunities to build on this regulatory foundation.

First, implement and build upon new government-led AI safety frameworks

One of the most effective ways to accelerate government action is to build on existing or emerging governmental frameworks to advance AI safety.

A key element to ensuring the safer use of this technology is a risk-based approach, with defined processes around risk identification and mitigation as well as testing systems before deployment. The AI Act sets out such a framework and this will be an important benchmark for the future. In other parts of the world, other institutions have advanced similar work, such as the AI Risk Management Framework developed by the U.S. National Institute of Standards and Technology, or NIST, and the new international standard ISO/IEC 42001 on AI Management Systems, which is expected to be published in the fall of 2023.

Microsoft has committed to implementing the NIST AI risk management framework and we will implement future relevant international standards, including those which will emerge following the AI Act. Opportunities to align such frameworks internationally should continue to be an important part of the ongoing EU-US dialogue.

As the EU finalizes the AI Act, the EU could consider using procurement rules to promote the use of relevant trustworthy AI frameworks. For instance, when procuring high-risk AI systems, EU procurement authorities could require suppliers to certify via third-party audits that they comply with relevant international standards.

We recognize that the pace of AI advances raises new questions and issues related to safety and security, and we are committed to working with others to develop actionable standards to help evaluate and address those important questions. This includes new and additional standards relating to highly capable foundation models.

Second, require effective safety brakes for AI systems that control critical infrastructure

Increasingly, the public is debating questions around the control of AI as it becomes more powerful. Similarly, concerns exist regarding AI control of critical infrastructure like the electrical grid, water system, and city traffic flows. Now is the time to discuss these issues – a debate already underway in Europe, where developers of high-risk AI systems will become responsible for warranting that the necessary safety measures are in place.

Our blueprint proposes new safety requirements that, in effect, would create safety brakes for AI systems that control the operation of designated critical infrastructure. These fail-safe systems would be part of a comprehensive approach to system safety that would keep effective human oversight, resilience, and robustness top of mind. They would be akin to the braking systems engineers have long built into other technologies such as elevators, school buses, and high-speed trains, to safely manage not just everyday scenarios, but emergencies as well.

In this approach, the government would define the class of high-risk AI systems that control critical infrastructure and warrant such safety measures as part of a comprehensive approach to system management. New laws would require operators of these systems to build safety brakes into high-risk AI systems by design. The government would then oblige operators to test high-risk systems regularly. And these systems would be deployed only in licensed AI datacenters that would provide a second layer of protection and ensure security.

Third, develop a broad legal and regulatory framework based on the technology architecture for AI

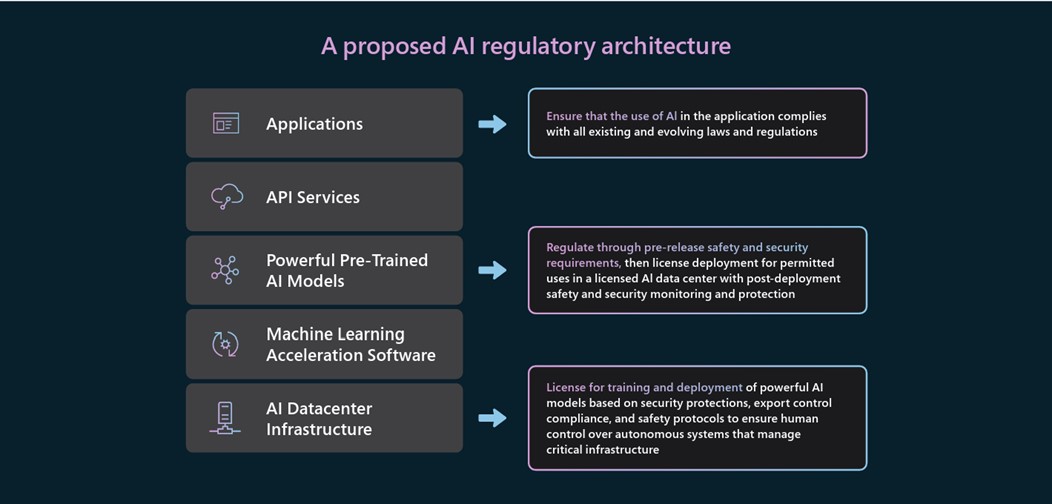

As we’ve worked over the past year with AI models at the frontier of this new technology, we’ve concluded that it’s critical to develop a legal and regulatory architecture for AI that reflects the technology architecture for AI itself.

Regulatory responsibilities need to be placed upon different actors based on their role in managing different aspects of AI technology. Those closest to relevant decisions on design, deployment, and use are best placed to comply with corresponding responsibilities and mitigate the respective risks, as they understand best the specific context and use-case. This sounds straightforward, but as discussions in the EU have demonstrated, it’s not always easy.

The AI Act acknowledges the challenges to regulating complex architecture through its risk-based approach for establishing requirements for high-risk systems. At the application layer, this means applying and enforcing existing regulations while being responsible for any new AI-specific deployment or use considerations.

It’s also important to make sure that obligations are attached to powerful AI models, with a focus on a defined class of highly capable foundation models and calibrated to model-level risk. This will impact two layers of the technology stack. The first will require new regulations for these models themselves. And the second will involve obligations for the operators of the AI infrastructure on which these models are developed and deployed. The blueprint we developed offers suggested goals and approaches for each of these layers.

The different roles and responsibilities require joint support. We are committed to helping our customers apply “Know Your Customer” (KYC) principles through our recently announced AI Assurance Program. Financial institutions use this framework to verify customer identities, establish risk profiles and monitor transactions to help detect suspicious activity. As Antony Cook details, we believe this approach can apply to AI in what we are calling “KY3C”: know one’s cloud, one’s customers, and one’s content. The AI Act does not expressly include KYC requirements, yet we believe such an approach will be key to meeting both the spirit and obligations of the act.

The AI Act requires developers of high-risk systems to put in place a risk management system to ensure that systems are tested, to mitigate risks to the extent possible, including through responsible design and development, and to engage in post-market monitoring. We fully support this. The fact that the AI Act hasn’t yet been finalized shouldn’t prevent us from applying some of these practices today. Even before the AI Act is implemented, we will test all our AI systems prior to release, and use red teaming to do so for high-risk systems.

Fourth, promote transparency and ensure academic and nonprofit access to AI

It’s also critical to advance the transparency of AI systems and broaden access to AI resources. While there are some inherent tensions between transparency and the need for security, there exist many opportunities to make AI systems more transparent. That’s why Microsoft has committed to an annual AI transparency report and other steps to expand transparency for our AI services.

The AI Act will require that AI providers make it clear to users that they are interacting with an AI system. Similarly, whenever an AI system is used to create artificially generated content, this should be easy to identify. We fully support this. Deceptive, AI-generated content or “deepfakes” – especially audio-visual content impersonating political figures – are of particular concern in terms of their potential harm to society and the democratic process.

In tackling this issue, we can start with building blocks that exist already. One of these is the Coalition for Content Provenance and Authenticity, or C2PA, a global standards body with more than 60 members including Adobe, the BBC, Intel, Microsoft, Publicis Groupe, Sony, and Truepic. The group is dedicated to bolstering trust in, and the transparency of, online information. In 2022, it released the world’s first technical specification for certifying digital content, which now includes support for generative AI. As Microsoft’s Chief Scientific Officer, Eric Horvitz, said last year, “I believe that content provenance will have an important role to play in fostering transparency and fortifying trust in what we see and hear online.”

There will be opportunities in the coming months to take important steps together on both sides of the Atlantic and globally to advance these objectives. Microsoft will soon deploy new state-of-the-art provenance tools to help the public identify AI-generated audio-visual content and understand its origin. At Build 2023, our annual developer conference, we announced the development of a new media provenance service. The service, implementing the C2PA specification, will mark and sign AI-generated videos and images with metadata about their origin, enabling users to verify that a piece of content is AI-generated. Microsoft will initially support major image and video formats and release the service for use with two of Microsoft’s new AI products, Microsoft Designer and Bing Image Creator. Transparency requirements in the AI Act, and potentially several of the to-be-developed standards related to the Act, present an opportunity to leverage such industry initiatives towards a shared goal.

We also believe it is critical to expand access to AI resources for academic research and the nonprofit community. Unless academic researchers can obtain access to substantially more computing resources, there is a real risk that scientific and technological inquiry will suffer, including that relating to AI itself. Our blueprint calls for new steps, including those we will take across Microsoft, to address these priorities.

Fifth, pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology

One lesson from recent years is that democratic societies often can accomplish the most when they harness the power of technology and bring the public and private sectors together. It’s a lesson we need to build upon to address the impact of AI on society.

AI is an extraordinary tool. But, like other technologies, it too can become a powerful weapon, and there will be some around the world who will seek to use it that way. We need to work together to develop defensive AI technologies that will create a shield that can withstand and defeat the actions of any bad actor on the planet.

Important work is needed now to use AI to protect democracy and fundamental rights, provide broad access to the AI skills that will promote inclusive growth, and use the power of AI to advance the planet’s sustainability needs. Perhaps more than anything, a wave of new AI technology provides an occasion for thinking big and acting boldly. In each area, the key to success will be to develop concrete initiatives and bring governments, companies, and NGOs together to advance them. Microsoft will do its part in each of these areas.

International partnership to advance AI governance

Europe’s early start towards AI regulation offers an opportunity to establish an effective legal framework, grounded in the rule of law. But beyond legislative frameworks at the level of nation states, multilateral public-private partnership is needed to ensure AI governance can have an impact today, not just a few years from now, and at the international level.

This is important as an interim solution before regulations such as the AI Act come into effect, but, perhaps more importantly, it will help us work towards a common set of shared principles that can guide both nation states and companies alike.

In parallel to the EU’s focus on the AI Act, there is an opportunity for the European Union, the United States, and the other members of the G7, as well as India and Indonesia, to move forward together on a set of shared values and principles. If we can work with others on a voluntary basis, then we’ll all move faster and with greater care and focus. That’s not just good news for the world of technology; it is good news for the world as a whole.

Working towards a globally coherent approach is important, recognizing that AI – like many technologies – is and will be developed and used across borders. And it will enable everyone, with the proper controls in place, to access the best tools and solutions for their needs.

We are very encouraged by recent international steps, including the announcement by the EU-US Trade and Technology Council (TTC) at the end of May of a new initiative to develop a voluntary AI Code of Conduct. This can bring together private and public partners to implement non-binding international standards on AI transparency, risk management, and other technical requirements for firms developing AI systems. Similarly, at the annual G7 Summit in Hiroshima in May 2023, leaders committed to “advance international discussions on inclusive artificial intelligence (AI) governance and interoperability to achieve our common vision and goal of trustworthy AI, in line with our shared democratic values”. Microsoft fully supports and endorses international efforts to develop such a voluntary code. Technology development and the public interest will benefit from the creation of principle-level guardrails, even if initially they are non-binding.

To make the many different aspects of AI governance work on an international level, we will need a multilateral framework that connects various national rules and ensures that an AI system certified as safe in one jurisdiction can also qualify as safe in another. There are many effective precedents for this, such as common safety standards set by the International Civil Aviation Organization, which means an airplane does not need to be refitted mid-flight from Brussels to New York.

In our view, an international code should:

- Build on the work already done at the OECD to develop principles for trustworthy AI

- Provide a means for regulated AI developers to attest to the safety of their systems against internationally agreed standards

- Promote innovation and access by providing a means for mutual recognition of compliance and safety across borders

Before the AI Act and other formal regulations come into force, it’s important that we take steps today to implement safety brakes for AI systems that control critical infrastructure. The concept of safety brakes, along with licensing for highly capable foundation models and AI infrastructure obligations, should be key elements of the voluntary, internationally coordinated G7 code that signatory nation states agree to incorporate into their national systems. High-risk AI systems, relating to critical infrastructure (e.g., transport, electrical grids, water systems) or systems that can lead to serious fundamental rights violations or other significant harms, could require additional international regulatory agencies, based on the model of the International Civil Aviation Organization, for example.

The AI Act creates a provision for an EU database for high-risk systems. We believe it’s an important approach, and it should be a consideration for the international code. Developing a coherent, joint, global approach makes immeasurable sense for all those involved in developing, using, and regulating AI.

Lastly, we must ensure academic researchers have access to study AI systems in depth. There are important open research questions around AI systems, including how one evaluates them properly across responsible AI dimensions, how one best makes them explainable, and how they best align with human values. The work the OECD is doing on the evaluation of AI systems is seeing good progress. But there’s an opportunity to go further and faster by fostering international research collaboration and helping academic communities feed into that process. The EU is well placed to lead on this, partnering with the U.S.

AI governance is a journey, not a destination. No one has all the answers, and it’s important we listen, learn, and collaborate. Strong, healthy dialogue between the tech industry, governments, businesses, academia, and civil society organizations is vital to make sure governance keeps pace with the speed at which AI is developing. Together, we can help realize AI’s potential to be a force for good.

This post was edited on June 29, 2023 at 5:03pm CET to include the recording of Brad Smith’s speech.