Creating a virtual stage when in-person isn’t possible

A virtual green screen, deep neural networks, and an Azure Kinect sensor (or two)

One of my favorite video games is Age of Empires. I used to play it with coworkers almost two decades ago, and I still play it with my son, who beats me mercilessly. One of the magical moments in the game is when you advance your civilization from one age to the next: from the Feudal Age to the Castle Age to the Imperial Age. Each age brings new technologies that open up entirely new strategies for defending against your opponent and winning the game.

Sometimes I feel we are in one of those age transitions in real life too. As in Age of Empires, it comes with new technologies that we can use to respond to external disruption and reimagine our strategy. With Covid-19, we find ourselves in our own moment of disruption, one that has pushed us to rethink how we do things. Events are one of those things.

If you’ve watched this year’s Microsoft Build, you’ve probably seen one of the innovation sessions on a “stage.” Spoiler alert: it’s fake. The presenters were all at home, safe and sound. Using a background matting process from the University of Washington and our Azure Kinect sensors, we were able to produce presentations that look as if we were presenting live on stage. But let me step back for a minute and share how we got here.

Covid-19 has made large-scale in-person events impossible, so we are all looking for new ways to communicate with our customers. If you participated in any part of Microsoft Build, you experienced first-hand how our Microsoft Global Events team had to shift everything to virtual.

As one of many content owners for Build, we also had to get creative. We took an experimental approach to presenting our content: by combining our own technology with the innovations of others, we created a unique way to deliver our sessions on a “virtual stage” at the conference.

The idea came from two published papers on background matting for images and video, one from Adobe Research in 2017 and one from the University of Washington in 2020. In basic terms, the approach lets anyone record a video of themselves and then uses AI models to predict the matte, the per-pixel transparency around the subject. Essentially, the process replaces the background without the need for a green screen.
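Background replacement rests on the standard compositing equation from matting: each pixel of a frame is a blend of a foreground color F and a background color B, weighted by the alpha matte α, so that I = αF + (1 − α)B. Once a model has predicted α, putting a presenter on a virtual stage amounts to evaluating the same equation with a new background. The minimal NumPy sketch below assumes float images in [0, 1] with shape (H, W, 3) and a matte of shape (H, W); the function name `composite` is hypothetical and not taken from the project's code.

```python
import numpy as np


def composite(foreground: np.ndarray, alpha: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Blend a foreground onto a background using I = alpha * F + (1 - alpha) * B.

    foreground, background: float arrays of shape (H, W, 3) with values in [0, 1].
    alpha: float array of shape (H, W) in [0, 1]; 1 = fully presenter, 0 = fully background.
    """
    if foreground.shape != background.shape:
        raise ValueError("foreground and background must have the same shape")
    # Add a trailing axis so the (H, W) matte broadcasts across the 3 color channels.
    a = np.clip(alpha, 0.0, 1.0)[..., np.newaxis]
    return a * foreground + (1.0 - a) * background


# A 2x2 example: the left column is the presenter, the right column is empty room.
presenter = np.full((2, 2, 3), 0.8)          # light-colored presenter pixels
virtual_stage = np.zeros((2, 2, 3))          # dark virtual stage
matte = np.array([[1.0, 0.0],
                  [0.5, 0.0]])               # 0.5 is a soft edge, such as a strand of hair
frame = composite(presenter, matte, virtual_stage)
```

The fractional value in the matte is what lets soft regions like hair blend into the new background instead of ending in a hard cutout edge.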

We built on this background matting process, pairing our Azure Kinect sensors with an AI model based on the University of Washington’s work, to give our presenters a new and easy way to record themselves at home and appear on our virtual stage.

The Azure Kinect camera captures depth information using infrared light, and that data helps make the AI model more accurate. We used an app called Speaker Recorder to capture two video streams from the Azure Kinect camera: the RGB (color) stream and the depth stream. Once the recording was complete, the AI model was applied through a command line tool. To get the full details on how this all came together, check out the Microsoft AI Lab.

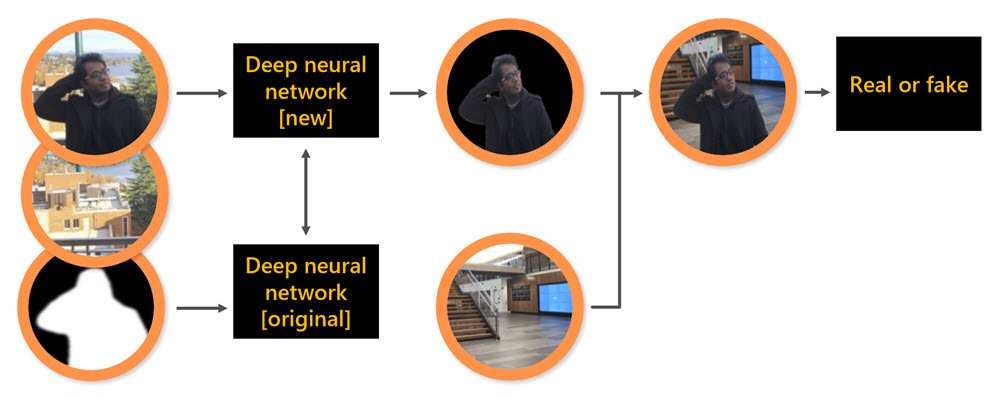

The AI model we used is based on work recently published by the University of Washington. In their research, the UW team developed a deep neural network that takes two images: one of the background alone and another of the person in front of that same background. The output of the neural network is a smooth transparency mask (an alpha matte).

This neural network was trained on images whose masks had been created by hand. The UW researchers used a dataset provided by Adobe, in which a designer manually created the transparency mask for each image.
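A common way to get more out of a small set of hand-made masks in matting research is to composite each manually matted foreground onto many different backgrounds, producing (photo, clean background, target alpha) triplets, and then penalize the difference between predicted and hand-made mattes. The sketch below shows that idea with a simple L1 loss; the helper names, the random toy data, and the choice of L1 as the only loss are illustrative assumptions, not the UW training code.

```python
import numpy as np


def make_training_example(fg, alpha, new_bg):
    """Build one (input image, background, target alpha) triplet.

    fg, new_bg: float arrays (H, W, 3) in [0, 1]; alpha: (H, W) hand-made matte.
    Returns the synthetic photo the network sees, the clean background it is
    also given, and the alpha it should learn to predict.
    """
    a = alpha[..., np.newaxis]
    image = a * fg + (1.0 - a) * new_bg
    return image, new_bg, alpha


def l1_alpha_loss(predicted_alpha, target_alpha):
    """Mean absolute error between a predicted matte and the hand-made one."""
    return float(np.mean(np.abs(predicted_alpha - target_alpha)))


rng = np.random.default_rng(0)
fg = rng.random((4, 4, 3))
alpha = (rng.random((4, 4)) > 0.5).astype(float)
# One hand-made matte paired with several random backgrounds yields several examples.
examples = [make_training_example(fg, alpha, rng.random((4, 4, 3))) for _ in range(3)]
image, bg, target = examples[0]
# Predicting "all background" costs exactly the fraction of foreground pixels.
baseline_loss = l1_alpha_loss(np.zeros_like(target), target)
```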

With this approach, the neural network can learn how to produce smooth edges around areas like hair or loose clothing. However, there are some limitations. If the person is wearing something similar in color to the background, the system renders it as holes in the image, which breaks the illusion.
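To see why similar colors cause holes, consider the simplest background-subtraction rule. It is not what the UW network does, but it shares the reliance on color differences: a pixel counts as foreground in proportion to how far its color is from the clean background plate. The function `difference_alpha` and its `threshold` are hypothetical, chosen only for illustration.

```python
import numpy as np


def difference_alpha(image, background, threshold=0.25):
    """Naive alpha estimate from color difference to a clean background plate.

    Pixels whose RGB color is at least `threshold` away (Euclidean distance)
    from the background get alpha 1; closer pixels fade linearly toward 0.
    """
    distance = np.linalg.norm(image - background, axis=-1)
    return np.clip(distance / threshold, 0.0, 1.0)


wall = np.array([0.2, 0.5, 0.2])              # green-ish wall
background = np.tile(wall, (1, 3, 1))         # a 1x3 background plate
image = background.copy()
image[0, 1] = [0.9, 0.1, 0.1]                 # red shirt: clearly different from the wall
image[0, 2] = [0.22, 0.5, 0.2]                # green shirt: almost the wall's color
alpha = difference_alpha(image, background)
# wall -> 0, red shirt -> 1, green shirt -> about 0.08: the shirt becomes a "hole".
```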

To address this, the UW researchers combined this method with another. A second neural network tries to estimate the person’s contour just by looking at the image. In the case of our virtual stage, we know there is a person on screen, so this network tries to identify that person’s silhouette. Adding the second network eliminates the holes caused by similar colors, but it has trouble with small details such as hair or fingers.

Here’s the interesting part. The UW researchers created an architecture called Context Switching. Depending on the conditions in the image, the system learns which of the two approaches to rely on, getting the best of both.
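The intuition behind switching between cues can be shown with a hand-written toy rule: per pixel, trust a color-difference estimate where the frame clearly differs from the background plate, and fall back on a silhouette mask where the colors are too similar. The UW Context Switching block is a learned network component that combines features, not a rule like this; the function `switch_alpha` and both thresholds are hypothetical.

```python
import numpy as np


def switch_alpha(image, background, silhouette, min_distance=0.1, soft_range=0.25):
    """Toy per-pixel switch between two alpha cues (not the UW learned block).

    Color cue: soft alpha from the RGB distance to the clean background plate.
      Good at soft edges such as hair; unreliable where colors match.
    Silhouette cue: a 0/1 person mask. Robust to matching colors; coarse at edges.
    Where the color difference is at least `min_distance`, use the color cue;
    elsewhere, fall back to the silhouette.
    """
    distance = np.linalg.norm(image - background, axis=-1)
    color_alpha = np.clip(distance / soft_range, 0.0, 1.0)
    trust_color = distance >= min_distance
    return np.where(trust_color, color_alpha, silhouette.astype(float))


# Four pixels in a row: wall, red shirt, green shirt (nearly the wall's color), hair edge.
wall = np.array([0.2, 0.5, 0.2])
background = np.tile(wall, (1, 4, 1))
image = background.copy()
image[0, 1] = [0.9, 0.1, 0.1]      # red shirt
image[0, 2] = [0.22, 0.5, 0.2]     # green shirt
image[0, 3] = [0.15, 0.3, 0.15]    # hair: half dark hair, half wall
silhouette = np.array([[0, 1, 1, 1]])
alpha = switch_alpha(image, background, silhouette)
# wall -> 0 (silhouette), red shirt -> 1 (color), green shirt -> 1 (silhouette, no hole),
# hair -> a soft value between 0 and 1 (color)
```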

In our case, because we are using Azure Kinect, we can go a step further and replace the second neural network with the silhouette provided by the Kinect, which is much more accurate because it comes from the captured depth information.
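A depth frame makes a silhouette almost free: the presenter is simply whatever sits within a certain distance of the camera. The sketch assumes the depth image is a uint16 array in millimeters (the Azure Kinect depth format, with 0 for pixels without a valid reading) that has already been aligned to the color camera, which the Azure Kinect Sensor SDK's transformation functions can do. The function name and the 500 to 1500 mm range are hypothetical; this is not the Speaker Recorder implementation.

```python
import numpy as np


def depth_silhouette(depth_mm, near_mm=500, far_mm=1500):
    """Binary person mask from a depth frame.

    depth_mm: uint16 array (H, W) of distances in millimeters, aligned to the
    color camera; 0 marks pixels with no valid depth reading.
    Pixels between near_mm and far_mm (an assumed presenter distance range)
    are treated as the presenter.
    """
    valid = depth_mm > 0
    in_range = (depth_mm >= near_mm) & (depth_mm <= far_mm)
    return valid & in_range


# 0 = no reading, ~1 m = presenter, 2.5-3 m = the room behind them.
depth = np.array([[0, 900, 2500],
                  [1000, 1100, 3000]], dtype=np.uint16)
mask = depth_silhouette(depth)
```

Unlike a color-based estimate, this mask does not care whether the presenter's clothes match the wall, which is why it can stand in for the silhouette network.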

We improved the model even further with another AI technique: adversarial training, using a generative adversarial network (GAN). We feed the output of our neural network to a second neural network, a discriminator, that tries to identify whether an image is fake or real. The original network is then adjusted in small steps so that it fools the discriminator. The result is a neural network that creates even more natural images.
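The adversarial setup comes down to two opposing loss functions: the discriminator is trained to score real photos near 1 and generated composites near 0, while the matting network is trained so that the discriminator scores its composites as real. The sketch below computes the classic binary cross-entropy GAN losses on plain NumPy arrays of discriminator scores. The UW paper's exact adversarial loss and training schedule may differ, the function names are hypothetical, and in real training a framework would differentiate these losses with respect to the network weights.

```python
import numpy as np

EPS = 1e-7  # keeps log() away from 0


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.

    d_real, d_fake: discriminator outputs in (0, 1), read as "probability real".
    """
    d_real = np.clip(d_real, EPS, 1 - EPS)
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return float(-np.mean(np.log(d_fake)))


# Composites the discriminator easily catches give the matting network a large loss;
# composites that fool it (scored near 1) give a small one.
easily_caught = generator_loss(np.array([0.05, 0.1]))
convincing = generator_loss(np.array([0.9, 0.95]))
```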

AI models, Context Switching, and a neural network to detect fakes create a more natural stage presence

Source: Background Matting: The World is Your Green Screen

Authors: Soumyadip Sengupta, Vivek Jayaram, Brian Curless, Steve Seitz, Ira Kemelmacher-Shlizerman

The result? Our virtual stage, which you saw in the Innovation Spaces at Build. The possible uses for a virtual stage are nearly endless, and the process gives us the flexibility to apply it to longer-form sessions, like the keynote we’re delivering at the Microsoft AI Virtual Summit. If you want to see how we are using the stage for the Virtual Summit, check out the live stream on Microsoft’s LinkedIn page on June 23 at 9 AM PT.

The entire training process and code are available on GitHub for you to use and build on. And who knows, maybe virtual events on a virtual stage, with content delivered from the comfort of our homes, are our way of advancing to the next Age.

I think I hear my son calling for a rematch.

To learn more about how we created our virtual stage, visit the Microsoft AI Lab.