When most people with normal vision walk down the street, they can easily differentiate the things they must avoid, such as trees, curbs and glass doors, from harmless things like shadows, reflections and clouds.

Chances are, most people can also anticipate which obstacles they will encounter next. At a street corner, for example, they know to watch out for cars and to prepare to step down off the curb.

The ability to differentiate and anticipate comes easily to humans, but it is still very difficult for artificial intelligence-based systems. That’s one big reason why self-driving cars and autonomous delivery drones are still emerging technologies.

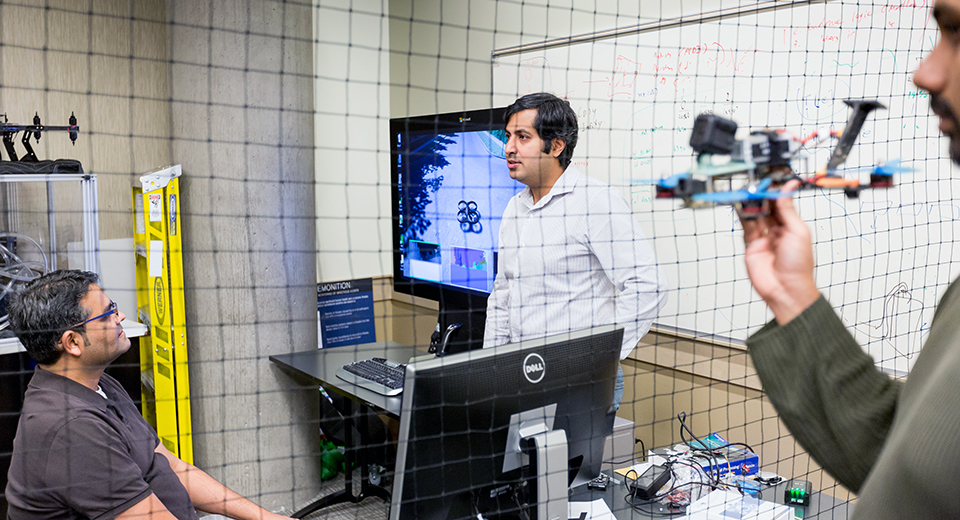

Microsoft researchers are aiming to change that. They are working on a new set of tools that other researchers and developers can use to train and test robots, drones and other gadgets to operate autonomously and safely in the real world. A beta version is available on GitHub under an open source license.

It’s all part of a research project the team has dubbed the Aerial Informatics and Robotics Platform. The platform includes software that lets researchers quickly write code to control aerial robots and other gadgets, along with a highly realistic simulator for collecting the data needed to train an AI system and for testing that system in a virtual world before deploying it in the real one.

Ashish Kapoor, a Microsoft researcher who is leading the project, said they hope the tools will spur major progress in creating artificial intelligence gadgets we can trust to drive our cars, deliver our packages and maybe even do our laundry.

“The aspirational goal is really to build systems that can operate in the real world,” he said.

That’s different from many other artificial intelligence research projects, which have focused on teaching AI systems to succeed in more artificial environments with well-defined rules, such as board games.

Kapoor said this work aims to help researchers develop more practical tools that can safely augment what people are doing in their everyday lives.

“That’s the next leap in AI, really thinking about real-world systems,” Kapoor said.

Simulating the real world

Let’s say you want to teach an aerial robot to tell the difference between a wall and a shadow. Chances are, you’d like to test your theories without crashing hundreds of drones into walls.

Until recently, simulators provided some help for this kind of testing, but they weren’t accurate enough to truly reflect the complexities of the real world. That realism is key to developing systems that can perceive the world around them as accurately as people do.

Now, thanks to big advances in graphics hardware, computing power and algorithms, Microsoft researchers say they can create simulators that offer a much more realistic view of the environment. The Aerial Informatics and Robotics Platform’s simulator is built on the latest photorealistic rendering technologies, which can accurately reproduce subtle details such as shadows and reflections that make a significant difference to computer vision algorithms.

“If you really want to do this high-fidelity perception work, you have to render the scene in very realistic detail – you have sun shining in your eyes, water on the street,” said Shital Shah, a principal research software development engineer who has been a key developer of the simulator.

Because the new simulator is so realistic, but not actually real, researchers can use it as a safe, reliable and cheap testing ground for autonomous systems.

That has two advantages. First, researchers can “crash” a costly drone, robot or other gadget as many times as they like without burning through tens of thousands of dollars in equipment, damaging actual buildings or hurting someone.

Second, it allows researchers to do better AI research faster. That includes gathering the training data used to build algorithms that react safely, and conducting the kind of AI research that requires lots of trial and error, such as reinforcement learning, in which a system learns by repeatedly taking actions and observing the outcomes.

The researchers say the simulator should help them more quickly reach the point where they can test, or even use, their systems in real-world settings that leave very little room for error.

Enabling development of intelligent robotic systems

In addition to the simulator, the Aerial Informatics and Robotics Platform includes a software library that lets developers quickly write code to control drones built on two of the most popular platforms: DJI and MAVLink. Normally, developers would have to spend time learning each platform’s separate API and writing separate code for each one.

The researchers expect to add more tools to the platform down the road, and in the meantime they hope that the library and simulator will help push the entire field forward.

For example, the tools could help researchers develop better perception abilities, which would help a robot learn to recognize elements of its environment and tell a real obstacle, like a door, from a false one, like a shadow. These perception abilities would also help a robot understand more complex things, such as how far away a pedestrian is.

Similarly, the Aerial Informatics and Robotics Platform could help developers make advances in planning capabilities, which aim to help the gadgets anticipate what will happen next and how they should respond, much as people know to expect passing cars when they cross a street. That kind of artificial intelligence, which would closely mimic how people navigate the real world, is key to building practical systems for safe everyday use.

The entire platform is designed to work with any type of autonomous system that needs to navigate its environment.

“I can actually use the same code base to fly a glider or drive a car,” Kapoor said.

Democratization of robotics

The researchers have been working on the platform for less than a year, but they are drawing on decades of experience in fields including computer vision, robotics, machine learning and planning. Kapoor said they made such quick progress in part because of the unique structure of Microsoft’s research labs, in which it’s easy for researchers with vastly different backgrounds to collaborate.

The researchers decided to make the project open source to advance the entire body of research into building artificial intelligence agents that can operate autonomously. Although many people envision a future in which drones, robots and cars operate on their own, for now most of these systems depend on a considerable amount of human direction.

The researchers also note that many robotics and artificial intelligence researchers don’t have the time or resources to develop these tools on their own, or do this kind of testing in the real world. That’s another reason for sharing their work.

“We want a democratization of robotics,” said Debadeepta Dey, a researcher working on the project.

They also hope the Aerial Informatics and Robotics Platform will help jump-start efforts to standardize protocols and regulations for how artificial intelligence agents should operate in the real world.

Kapoor notes that everyone who drives a car knows to follow a standard set of protocols about things like which side of the road to drive on, when to stop for pedestrians and how fast to go. Those sorts of standards don’t yet exist for artificial intelligence agents.

With a system like this, he said, researchers could develop best practices that apply across the board, improving safety as autonomous systems become more mainstream.

“The whole ecosystem needs to evolve,” Kapoor said.

Allison Linn is a senior writer at Microsoft.