In the last few months we’ve seen some pretty great applications of natural user interface (NUI). It seems fair to say that NUI, in its various forms, is becoming more widely used and accepted. But never ones to sit back and relax, the folks at Microsoft Research (MSR) have been toying with a few more ideas they’ve had up their sleeves.

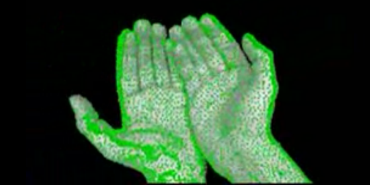

My favorite is Holodesk, a research project out of the Sensors and Devices group at Microsoft Research Cambridge. I won’t attempt to describe what it does in great detail, except to say that with Holodesk you can manipulate virtual 3-D images with your hands. Whilst this is only a research project at this stage, I can envisage future applications in areas such as board gaming, rapid prototype design or perhaps even telepresence, where users would share a single 3-D scene viewed from different perspectives. I know it sounds very Star Trek, but this is not science fiction.

For the record, Holodesk isn’t the only 3-D interaction experiment out there. What sets it apart from the rest is its use of beam splitters and a graphics processing algorithm, which work together to provide a more lifelike experience. The video explains it far better than I can, so I’ll leave it at that.

As if that weren’t enough, this week MSR published several research papers at the ACM Symposium on User Interface Software and Technology (UIST). All of the papers relate to some aspect of NUI. For example, there’s a project called OmniTouch that uses a pico projector and a 3-D camera to turn virtually any surface into a multi-touch interface. Eventually, OmniTouch could be shrunk to no larger than a pendant or a watch.

Another example is PocketTouch, which focuses on multi-touch sensors that let you interact with a smartphone or other device through the fabric of your shirt pocket or jeans. Conceivably, you could pause a song or listen to voicemail without fishing out your phone. Both projects are part of a larger effort by MSR to explore unconventional uses of touch interfaces rather than settle for the status quo.

Both projects have seen plenty of coverage in the last day, and you can read more about them and watch videos on the Microsoft Research site.