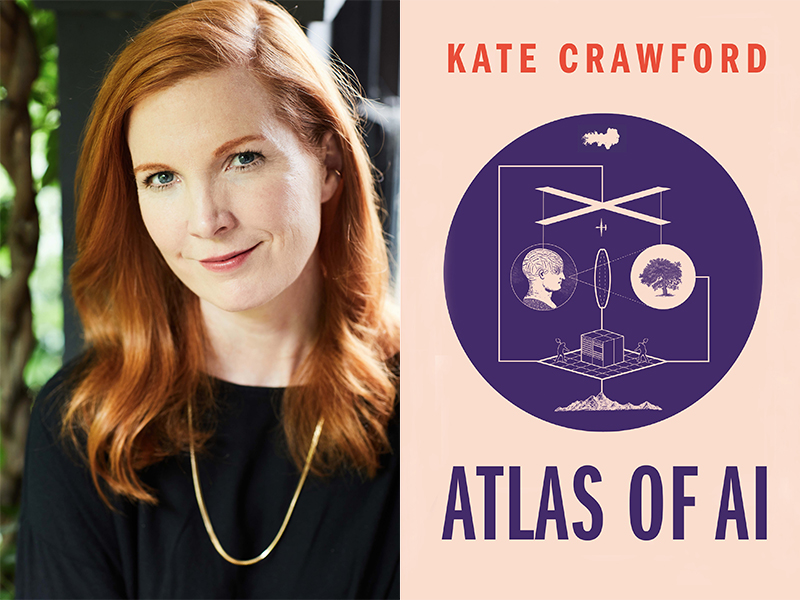

Kate Crawford is a Senior Principal Researcher at Microsoft Research (MSR) New York and a Research Professor at USC Annenberg. She’s a cofounder of the FATE group at MSR, and the author of Atlas of AI (Yale University Press, 2021). We were lucky enough to have Kate answer some questions about her new book, how AI is affecting our world, and how we study and regulate AI.

What inspired you to write “Atlas of AI”?

My aim for this book is to map how AI is really “made,” and to understand the wider planetary costs. AI is often represented as a set of technical approaches, as abstract and immaterial. But in reality, it’s a material set of systems that rely on vast amounts of energy, low-paid human labor, and large-scale data extraction.

A major inspiration for this book came from having the privilege of collaborating with Prof. Vladan Joler, who is both a researcher and an artist. Several years ago, we published a project called Anatomy of an AI System, which maps the entire lifecycle of an Amazon Echo. We began by thinking about the data processes, and then expanded to research the components within the device itself: Where are they mined? Where are they smelted? What are the extractive processes along the supply chain? Where are the e-waste tips located when they are thrown away? It made us contend with the much wider political economy of technology.

Writing Atlas of AI took five years, and I went on many journeys to places where I could see the manufacturing process of AI for myself: from a lithium mine to an Amazon fulfillment center, from historical archives to giant data centers.

How is AI technology impacting the world — for better or worse?

AI technologies have much potential, from tracking climate change to translating languages. But as many researchers have shown, they can also come at a real cost. How we assess those costs is of critical importance, because the benefits and harms aren’t evenly distributed.

We can’t presume that every AI technology is necessary or worthwhile, or that the downsides will affect all communities the same way. For example, a number of scholars, including Emma Strubell, Timnit Gebru, Meg Mitchell, and Sasha Luccioni, among many others, have documented the environmental impact of machine learning and large language models, and also pointed to how the worst impacts are felt by communities of color and those living in poverty. We rarely think of how training a new AI model has enormous environmental consequences, but with the current climate emergency, we have a responsibility to understand the full social and ecological implications. Likewise with labor: there are so many kinds of hidden labor all along the supply chain, from miners to crowdworkers (and for more on the experiences of crowdworkers, I recommend Ghost Work by Microsoft colleagues Mary Gray and Sid Suri). These are serious challenges we face that we cannot ignore, especially if we want the potential benefits of AI to be distributed justly and fairly.

Where do you see the advancement of AI technology going in the future?

There’s a tendency to focus on technological advances, but there are also exciting advancements happening in how we study and regulate AI. For example, we’ve seen tremendous growth in work across the wider social, political, and ecological implications of AI, as well as work on fairness, accountability, transparency, and ethics (what we call FATE at MSR). In the EU, there’s been some substantive progress toward creating an omnibus regulatory framework for AI, the so-called AI Act. There’s a pressing need in the US for governance and regulation to catch up to help ensure that our rights are protected when technologies are deployed. In this context, it’s interesting to see that Eric Lander and Alondra Nelson at the Office of Science and Technology Policy in the White House are calling for a bill of rights for AI.

What is the biggest takeaway you want your readers to have after reading your book?

I hope that after reading my book, people have new ways of understanding AI. AI can seem like a spectral force — as disembodied computation — but these systems are anything but abstract. They are physical infrastructures that are reshaping the Earth, while simultaneously shifting how we see the world and each other. It’s important to understand how AI is contributing to widening asymmetries of power. But no arrangement of power is inevitable — which means that things can be different if we have a commitment to a more just and sustainable world.

How long have you been with Microsoft Research, and what’s your favorite part of your job?

I’ve been at MSR for almost ten years, and I can easily say my favorite part of the job is the people I get to work with. I’m blown away by the brilliance and generosity of my colleagues. I’ve been lucky enough to work with both the Social Media Collective in the MSR New England lab and the FATE group at MSR New York, and these are such extraordinarily warm, creative, and thoughtful communities of scholars and activists. I’m grateful to learn from them every day.

To learn more about Kate’s new book, Atlas of AI, and to find where you can order it at your local bookstore, head to katecrawford.net.