New Z-code Mixture of Experts models improve quality, efficiency in Translator and Azure AI

Microsoft is making upgrades to Translator and other Azure AI services powered by a new family of artificial intelligence models its researchers have developed called Z-code, which offer the kind of performance and quality benefits that other large-scale language models have but can be run much more efficiently.

“Our goal is to help everyone and every organization on the planet to communicate better, and to achieve that goal there are really two important dimensions — we want the quality of translations to be as good as possible and we want to support as many languages as possible,” said Xuedong Huang, Microsoft technical fellow and Azure AI chief technology officer.

Z-code takes advantage of shared linguistic elements across multiple languages via transfer learning (which applies knowledge from one task to another, related task) to improve quality for machine translation and other language understanding tasks. It also helps extend those capabilities beyond the most common languages across the globe to underrepresented languages that have less available training data.

“With Z-code we are really making amazing progress because we are leveraging both transfer learning and multitask learning from monolingual and multilingual data to create a state-of-the-art language model that we believe has the best combination of quality, performance and efficiency that we can provide to our customers,” Huang said.

These models use a sparse “Mixture of Experts” approach that is more efficient to run because it only needs to engage a portion of the model to complete a task, as opposed to other architectures that have to activate an entire AI model to run every request. This architecture allows massive scale in the number of model parameters while keeping the amount of compute constant.
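The routing idea behind a sparse Mixture of Experts layer can be sketched in a few lines. This is a generic top-k gating example, not Z-code's actual implementation (the article does not describe its router). The router weights, the toy ReLU "experts," `k=2` and the sizes are all hypothetical. A router scores every expert for a given token, only the k best-scoring experts are evaluated, and their outputs are mixed using softmax weights.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router, experts, k=2):
    """Route one token vector x through only the top-k experts (generic sketch)."""
    # Router score per expert: dot product of its (hypothetical) weight vector with x.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router]
    # Keep the k highest-scoring experts; the others are never evaluated.
    top_k = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top_k])  # renormalise over the selected experts
    out = [0.0] * len(x)
    for g, i in zip(gates, top_k):
        y = experts[i](x)
        out = [o + g * y_j for o, y_j in zip(out, y)]
    return out, top_k

random.seed(0)
d_model, num_experts = 4, 8
calls = [0] * num_experts  # how many times each expert actually ran

def make_expert(i, scale):
    def expert(v):
        calls[i] += 1
        return [max(0.0, scale * v_j) for v_j in v]  # toy ReLU "feed-forward" expert
    return expert

router = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(num_experts)]
experts = [make_expert(i, 1.0 + i) for i in range(num_experts)]
x = [0.5, -1.0, 2.0, 0.1]
y, used = moe_forward(x, router, experts, k=2)
print("experts used:", used, "expert calls:", sum(calls), "of", num_experts)
```

Adding more experts increases the number of parameters the model can store, but each token still triggers only `k` expert evaluations. This is the "portion of the model" behaviour described above.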

To put these models in production, Microsoft is using NVIDIA GPUs and Triton Inference Server to deploy and scale them efficiently for high-performance inference.

Microsoft has recently deployed Z-code models to improve common language understanding tasks such as named entity recognition, text summarization, custom text classification and key phrase extraction across its Azure AI services. But this is the first time a company has publicly demonstrated that it can use this new class of Mixture of Experts models to power machine translation products.

The new Z-code-based translation model is now available, by invitation initially, to customers using document translation in Translator, a Microsoft Azure Cognitive Service which is a part of Azure AI.

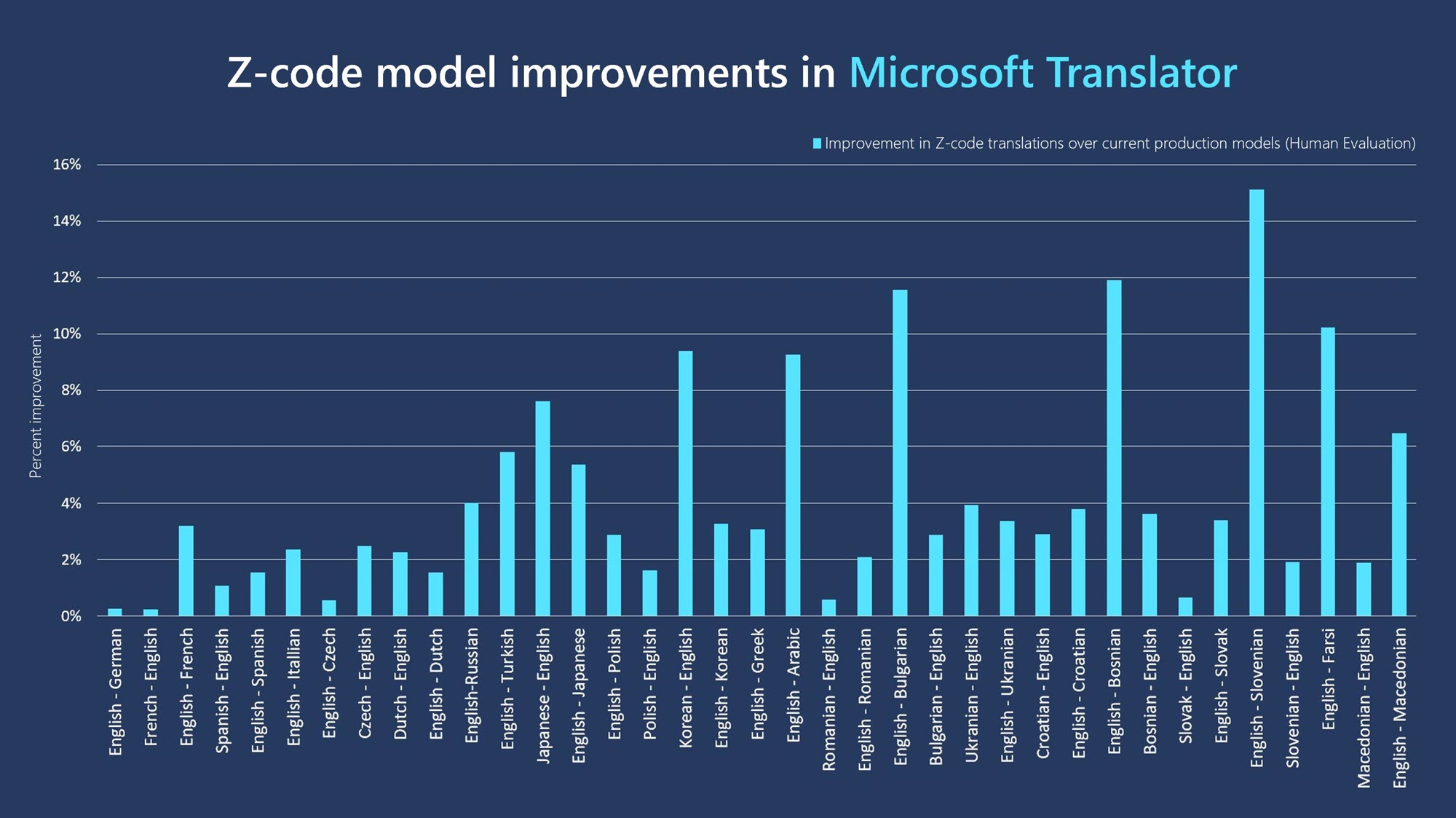

Microsoft’s Z-code models consistently improved translation quality over current production models, according to common industry metrics. Typical multilingual transfer learning approaches mainly show quality gains in languages that have fewer direct translation examples available for training. In contrast, the Z-code Mixture of Experts models show consistent gains even in the largest, most data-rich languages.

New Z-code Mixture of Experts AI models are powering improvements and efficiencies in Translator and other Azure AI services.

Human evaluators in a blind test commissioned by Microsoft found that the Z-code Mixture of Experts models improved translations across languages, with an average gain of 4%. For instance, the models improved English to French translations by 3.2%, English to Turkish by 5.8%, Japanese to English by 7.6%, English to Arabic by 9.3% and English to Slovenian by 15%.

Creating more powerful and integrative AI systems

Z-code is part of Microsoft’s larger XYZ-code initiative that seeks to combine models for text, vision, audio and multiple languages to create more powerful and integrative AI systems that can speak, hear, see and understand people better.

Over the past five years, Microsoft has developed models that have matched human performance in conversational speech recognition, machine translation, image captioning, SuperGLUE natural language understanding and commonsense question answering. These breakthroughs provide the foundation to realize more ambitious AI systems that can achieve multisensory and multilingual learning that is closer to how people learn and understand, Huang said.

“Those are the pieces, the building blocks that we are using to build a truly differentiated intelligence…and to form production systems that are cost efficient,” Huang said.

Z-code models were developed as part of Microsoft’s AI at Scale and Turing initiatives, which seek to build large models that are pretrained on vast amounts of text to understand the nuances of language. These models can be integrated into multiple Microsoft products and also made available to customers for their own uses.

The same underlying model can be fine-tuned to perform different language understanding tasks such as translating between languages, summarizing a speech, offering ways to complete a sentence or generating suggested tweets, instead of having to develop separate models for each of those narrow purposes.


Many of these language models, however, are so large that it can be challenging to integrate them into real-world products. But Z-code Mixture of Experts models are what’s known as “sparse,” which means that they only activate a fraction of the model’s parameters to perform an individual task, as opposed to engaging the whole model every time.

That makes them much more cost-efficient to run, in the same way that it’s cheaper and more efficient to only heat your house in winter during the times of day that you need it and in the spaces that you regularly use, rather than keeping a furnace running full blast all the time.
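The arithmetic behind "only activate a fraction of the model’s parameters" is straightforward. The sketch below counts the expert feed-forward weights in a single Mixture of Experts layer. The layer sizes (`d_model=1024`, `d_ff=4096`), the expert counts and `k=2` are hypothetical values chosen only for illustration; the article does not give Z-code’s dimensions.

```python
def moe_param_counts(d_model, d_ff, num_experts, k):
    """Expert feed-forward parameters in one MoE layer: stored vs. used per token."""
    per_expert = 2 * d_model * d_ff      # up- and down-projection matrices; biases ignored
    total = num_experts * per_expert     # every expert is stored in the model
    active = k * per_expert              # only the k routed experts run for a token
    return total, active

# Hypothetical layer sizes, chosen only to make the arithmetic concrete.
for num_experts in (8, 32, 128):
    total, active = moe_param_counts(d_model=1024, d_ff=4096, num_experts=num_experts, k=2)
    print(f"{num_experts:>3} experts: stored={total:,} active per token={active:,} "
          f"({active / total:.1%})")
```

The active count stays fixed at 16,777,216 (2 × 2 × 1024 × 4096) while the stored count grows linearly with the number of experts. In the heating analogy, this corresponds to adding rooms to the house without heating more of them at once.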

Microsoft researchers collaborated closely with NVIDIA to deploy Z-code Mixture of Experts models in production on NVIDIA GPUs for the first time. Using NVIDIA Triton Inference Server, they deployed these models with a more efficient runtime that uses CUTLASS and FasterTransformer to optimize this new type of model. The new runtime achieved up to a 27x speedup over non-optimized GPU runtimes.

The Z-code team also worked closely with Microsoft DeepSpeed researchers to learn how to efficiently train massive Mixture of Experts models such as Z-code, as well as more modestly sized Z-code models for production scenarios.

“We have been able to build this one model that can cover a lot of languages and serve various tasks from summarization to text generation to translation and be useful for many other Microsoft teams,” said Hany Hassan Awadalla, Microsoft principal researcher and research manager who helps lead development of Z-code models for Translator and other Azure Cognitive Services.

“So we are working to spread this across the company and reduce their deployment costs, which is the vision of AI at Scale generally,” he said.

Integrating research into customer products

The Z-code models are initially being used to boost Translator’s document translation features, which allow customers to translate entire Word documents, PDFs, PowerPoint presentations or other documents into new languages with all the formatting preserved.

| Source | Reference translation | Before (production model) | After (Z-code) |
|---|---|---|---|
| Komisija u razumnom roku sažetke dostavlja Sekretarijatu ICCAT-a. | The Commission shall forward the summaries to the ICCAT Secretariat within a reasonable period of time. | Within a reasonable time of time, the Commission submits the summary to the ICCAT Secretariat. | The Commission shall transmit the summaries to the ICCAT Secretariat within a reasonable period of time. |
| Мисля, че трябва да бъдем внимателни, за да облекчим производителите, дистрибуторите и търговците на дребно. | I think we have to be careful to ease the burden for producers, distributors and retailers. | I think we need to be careful to ease manufacturers, distributors and retailers. | I think we need to be careful to make it easier for manufacturers, distributors and retailers. |

Microsoft has developed a new class of AI Mixture of Experts models called Z-code that are boosting accuracy in Translator, an Azure Cognitive Service, as shown in these before-and-after examples.

Document translation is a natural first target because Z-code runs most efficiently when it has batches of sentences to translate at once, said Vishal Chowdhary, partner development manager of Translator, who led efforts to turn a research model into something that could be deployed in real-world production scenarios and made available to customers.
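One reason whole documents suit batched inference is that their sentences can be grouped before they are sent to the model. The sketch below is a hypothetical illustration of length-bucketed batching and is not Translator’s actual pipeline, which the article does not describe. It splits text on sentence-ending punctuation with a simple regex, sorts sentences by length so each batch needs little padding, and packs them up to an assumed `max_batch_tokens` budget. The function name and the whitespace "token" count are also assumptions.

```python
import re

def sentence_batches(document, max_batch_tokens=64):
    """Split a document into sentences and pack them into length-sorted batches.

    Returns a list of batches of (original_index, sentence) pairs so that the
    translations can be put back in document order afterwards. A sentence longer
    than max_batch_tokens still gets its own batch rather than being split.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", document.strip()) if s]
    # Shortest sentences first, so neighbours in a batch have similar lengths.
    order = sorted(range(len(sentences)), key=lambda i: len(sentences[i].split()))
    batches, current, current_tokens = [], [], 0
    for i in order:
        n = len(sentences[i].split())  # crude whitespace "token" count
        if current and current_tokens + n > max_batch_tokens:
            batches.append(current)
            current, current_tokens = [], 0
        current.append((i, sentences[i]))
        current_tokens += n
    if current:
        batches.append(current)
    return batches

doc = ("The Commission shall forward the summaries. "
       "It shall do so within a reasonable period of time! Is that clear? Yes.")
batches = sentence_batches(doc, max_batch_tokens=8)
for batch in batches:
    print([i for i, _ in batch])
```

The original indices are kept alongside each sentence because sorting by length scrambles document order. A formatting-preserving translator would need to restore that order after translation.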

Previously, Translator needed 20 separate models to translate 10 languages to and from English: one for English to French, French to English, English to Macedonian, Macedonian to English and so on.

Now, one single Z-code production model can translate all 10 languages to and from English, eliminating the need for multiple systems. Larger research Z-code models have been able to translate directly between 101 languages in 10,000 directions, without having to go through English first.
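The model and direction counts above follow from simple counting. With English as the hub, each of N languages needs one direction into English and one out of it, giving 2N. Translating directly between any two of N languages requires N × (N − 1) ordered pairs. The article’s "10,000 directions" appears to be a rounded form of the 101-language result. The function names below are illustrative only.

```python
def english_pivot_directions(num_languages):
    """Directions when every language is paired only with English, both ways."""
    return 2 * num_languages

def direct_directions(num_languages):
    """Ordered source -> target pairs when any language can translate into any other."""
    return num_languages * (num_languages - 1)

print(english_pivot_directions(10))  # one bilingual model per direction previously
print(direct_directions(101))        # 101 x 100 ordered language pairs
```

This also shows why separate bilingual models stop scaling. Their number grows with the number of directions, whereas a single multilingual model covers all of them.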

Companies with international operations in multiple markets often need a way to improve communication across departments with employees that speak many different languages. Today’s machine learning models need huge translation data sets with dialects for training, and there may not be enough data for all the desired languages and dialects, particularly in smaller markets.

The ability to share knowledge across different languages enables Z-code to produce more accurate results for underrepresented languages that don’t have a huge number of translation examples to learn from. This will help improve AI fairness and ensure that high-quality translations are not restricted to languages with rich training resources only, Huang said.

“The 107 languages we currently support might cover what’s spoken at the Olympics or the United Nations,” Huang said. “But there are 7,000 languages spoken around the world and there are a lot of small communities we aren’t able to support yet. We want to be fully multilingual with our AI because our goal is to serve every citizen on the planet.”

Related:

- Learn more: Project Z-code

- Read more: Microsoft Translator enhanced with Z-code Mixture of Experts models

- Read more: A holistic representation toward Integrative AI

- Read more: DeepSpeed powers 8x larger Mixture of Experts model training with high performance

- Read more: Scalable and efficient Mixture of Experts training for multitask multilingual models

- Learn more: Microsoft Translator

- Learn more: Azure Cognitive Services

- Read more: Getting People Talking: Microsoft Improves AI Quality and Efficiency in Translator Using NVIDIA Triton

Top image: Translator Partner Development Manager Vishal Chowdhary (left) and Principal Researcher Hany Hassan Awadalla (right) helped lead the Microsoft team that is now using Z-code Mixture of Experts AI models to power machine translation improvements in Translator. Photo by Dan DeLong for Microsoft.

Jennifer Langston writes about Microsoft research and innovation. Follow her on Twitter.