The Monthly Tech-In: Your source of “byte-sized” updates on Microsoft innovations and global tech

More from AI

Microsoft’s advancements in AI are grounded in our company’s mission to help every person and organization on the planet to achieve more — from helping people be more productive to helping solve society's most pressing problems.

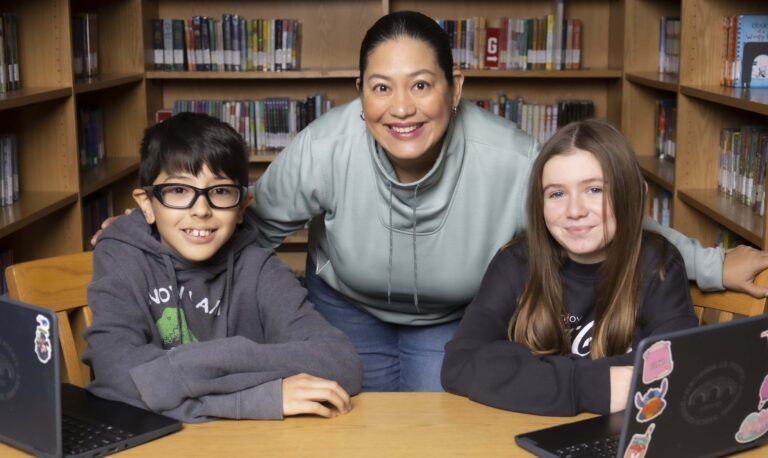

View allMore from Work & Life

Learn how we’re helping people stay connected, engaged and productive — at work, at school, at home and at play.

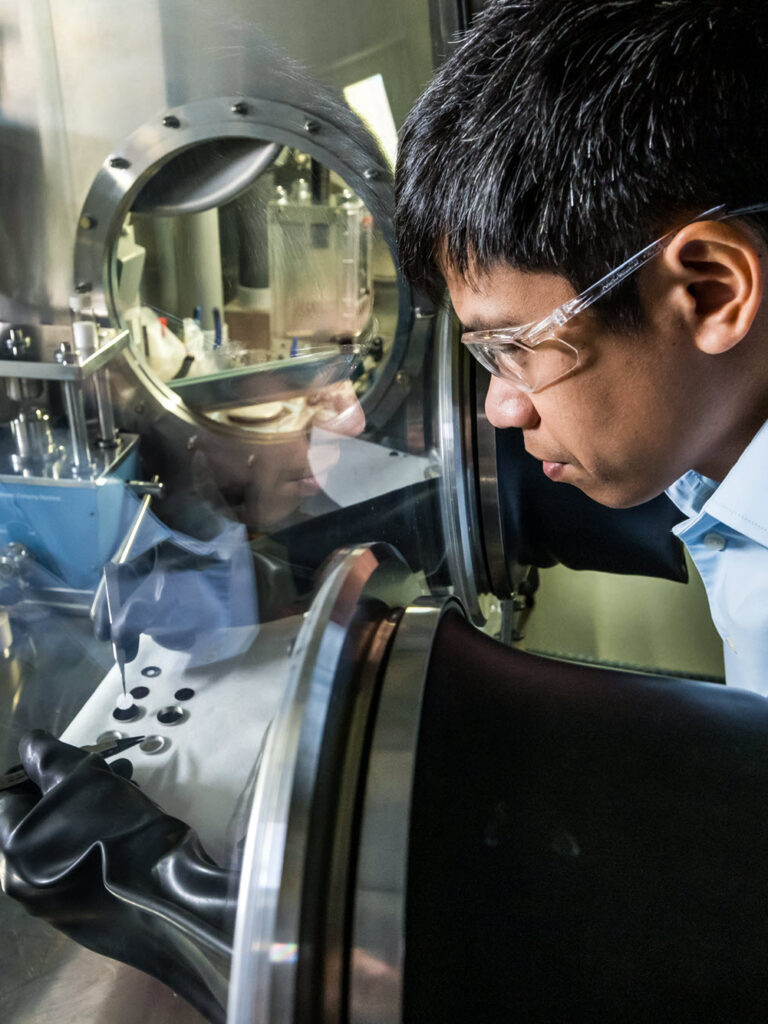

View allMore from Digital Transformation

Microsoft customers and partners are using technology in innovative, disruptive and transformative ways. These are their stories.

View allMore from Sustainability

Microsoft is accelerating progress toward a more sustainable future by working to reduce our environmental footprint, helping our customers build sustainable solutions and advocating for policies that benefit the environment.

View all