AI technology helps students who are deaf learn

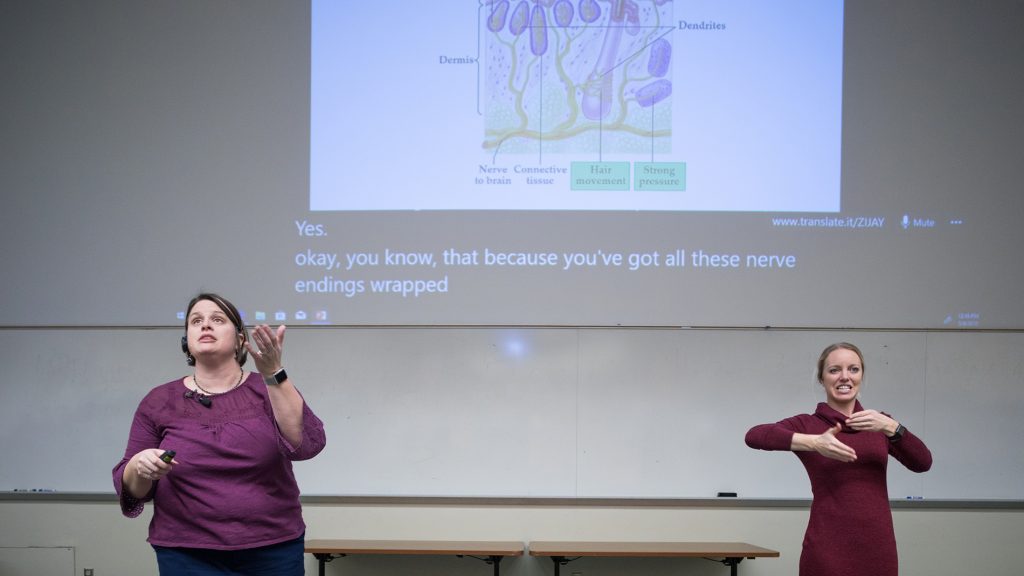

ROCHESTER, NY — As stragglers settle into their seats for general biology class, real-time captions of the professor’s banter about general and special senses – “Which receptor picks up pain? All of them.” – scroll across the bottom of a PowerPoint presentation displayed on wall-to-wall screens behind her. An interpreter stands a few feet away and interprets the professor’s spoken words into American Sign Language, the primary language of deaf people in the US.

Except for the real-time captions on the screens in front of the room, this is a typical class at the Rochester Institute of Technology in upstate New York. About 1,500 students who are deaf and hard of hearing are an integral part of campus life at the sprawling university, which has 15,000 undergraduates. Nearly 700 of the students who are deaf and hard of hearing take courses with students who are hearing, including several dozen in Sandra Connelly’s general biology class of 250 students.

The captions on the screens behind Connelly, who wears a headset, are generated by Microsoft Translator, an AI-powered communication technology. The system uses an advanced form of automatic speech recognition to convert raw spoken language – ums, stutters and all – into fluent, punctuated text. Removing disfluencies and adding punctuation lead to higher-quality translations into the more than 60 languages that the translator technology supports. People who are deaf and hard of hearing recognized this cleaned-up, punctuated text as an ideal way to access spoken language alongside ASL.
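To make the idea of "cleaning up" raw speech concrete, here is a deliberately simple sketch. It assumes a hand-picked filler list and two crude rules (drop filler words, collapse immediate word repetitions); Microsoft Translator does this with trained models, not with rules like these.

```python
# Hypothetical filler list for illustration only; production systems learn
# which words are disfluencies from data instead of using a fixed list.
FILLERS = {"um", "uh", "er", "ah"}


def clean_transcript(raw: str) -> str:
    """Toy post-processor: drop fillers, collapse stutters, add basic punctuation."""
    kept: list[str] = []
    for word in raw.lower().split():
        if word in FILLERS:
            continue  # drop filler words such as "um"
        if kept and kept[-1] == word:
            continue  # collapse immediate repetitions such as "the the"
        kept.append(word)
    if not kept:
        return ""
    sentence = " ".join(kept)
    # Capitalize the first letter and close the sentence with a period.
    return sentence[0].upper() + sentence[1:] + "."


print(clean_transcript("so um the the receptor uh picks up pain"))
# → So the receptor picks up pain.
```

The repetition rule is crude: it would also merge a legitimate "had had", which is one reason real systems learn these decisions from data.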

Microsoft is partnering with RIT’s National Technical Institute for the Deaf, one of the university’s nine colleges, to pilot the use of Microsoft’s AI-powered speech and language technology to support students in the classroom who are deaf or hard of hearing.

“The first time I saw it running, I was so excited; I thought, ‘Wow, I can get information at the same time as my hearing peers,’” said Joseph Adjei, a first-year student from Ghana who lost his hearing seven years ago. When he arrived at RIT, he struggled with ASL. The real-time captions displayed on the screens behind Connelly in biology class, he said, allowed him to keep up with the class and learn to spell the scientific terms correctly.

Now in the second semester of general biology, Adjei, who is continuing to learn ASL, takes a seat in the front of the class and regularly shifts his gaze between the interpreter, the captions on the screen and the transcript on his mobile phone, which he props up on the desk. The combination, he explained, keeps him engaged with the lecture. When he doesn’t understand the ASL, he checks the captions, which give him another source of information and fill in what he missed from the interpreter.

The captions, he noted, occasionally miss points that are crucial in a biology class, such as the difference between “I” and “eye.” “But it is so much better than not having anything at all.” Outside class, Adjei also uses the Microsoft Translator app on his phone to communicate with hearing peers.

“Sometimes when we have conversations they speak too fast and I can’t lip read them. So, I just grab the phone and we do it that way so that I can get what is going on,” he said.

AI for captioning

Jenny Lay-Flurrie, Microsoft’s chief accessibility officer, who is deaf herself, said the pilot project with RIT shows the potential of AI to empower people with disabilities, especially people who are deaf. The captions from Microsoft Translator add another layer of communication that, together with sign language, could help people, including herself, achieve more, she noted.

The project is in the early stages of rollout to classrooms. Connelly’s general biology class is one of 10 equipped for the AI-powered real-time captioning service, which is an add-in to Microsoft PowerPoint called Presentation Translator. Students can use the Microsoft Translator app running on their laptop, phone or tablet to receive the captions in real time in the language of their choice.

“Language is the driving force of human evolution. It enhances collaboration, it enhances communication, it enhances learning. By having the subtitles in the RIT classroom, we are helping everyone learn better, to communicate better,” said Xuedong Huang, a technical fellow and head of the speech and language group for Microsoft AI and Research.

Huang started working on automatic speech recognition in the 1980s to help the 1.3 billion people in his native China avoid typing Chinese on keyboards designed for Western languages. Applying deep learning to speech recognition a few years ago, he noted, gave the technology human-like accuracy. That progress led to a machine translation system that translates sentences of news articles from Chinese to English, and gave the team “the confidence to introduce the technology for everyday use by everyone.”

Growing demand for access services

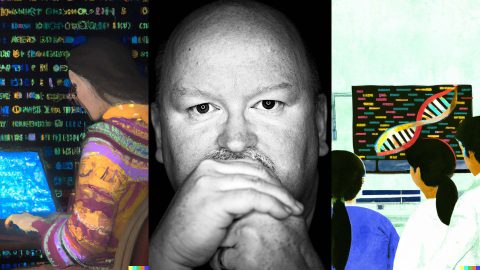

When Gary Behm enrolled in 1974, he was one of about 30 students who are deaf and hard of hearing registered for classes at RIT. ASL interpreters interpreted his professors’ spoken words into sign language, as interpreters do in classes across campus today. He graduated with a degree in electrical engineering and pursued a successful career at IBM. He moved around the country, picked up a master’s degree in mechanical engineering and, with his wife, who is also deaf, raised three sons, two of whom are deaf.

Once the children were grown and pursuing careers of their own, he and his wife, whom he met at NTID, found their way back to the college. Behm, a computer-savvy mechanical engineer, started working on access technologies to support NTID’s growing student body, which now includes more than 1,500 students, nearly half of them registered for classes in RIT’s eight other colleges.

“We are very excited about that growth, but we are constrained by the access services that we can provide to those students,” said Behm, who is now the interim associate vice president of academic affairs at NTID and director of the Center on Access Technology, the area charged with research and deployment of emerging access technologies.

A combination of access services such as interpreters and real-time captioning helps students who are deaf and hard of hearing overcome obstacles to engagement in the classroom and keep up with lectures. Students who are hearing, Behm explained, routinely split their attention in the classroom. If the professor writes an equation on the board while talking, for example, students who are hearing can listen and copy the equation into their notebooks at the same time.

“For a deaf person, that is impossible. My engagement is linked to the interpreter,” said Behm. “But when a professor says something like, ‘See this equation on the board,’ I have to break my engagement with the interpreter and try to see which equation they are talking about, look at it, understand it.”

“By the time I get back to catch the information that is being transmitted by the interpreter, it is gone.”

To help address the engagement problem, the university employs a full-time staff of about 140 interpreters, who are critical for communication, and more than 50 captionists. The captionists use a university-developed technology called C-Print to provide real-time transcriptions of lectures that are displayed on the laptops and tablets of students who are deaf and hard of hearing. In addition, a fleet of student note-takers produce shareable notes so that the students who are deaf and hard of hearing can focus on the interpreters and captions during class.

“The question now becomes: Can we continue to increase our access services?” said Behm.

As more students who are deaf and hard of hearing enroll in RIT degree programs dispersed across the university’s colleges, RIT and NTID remain committed to helping their students fully engage in campus life. RIT already employs the largest staff of interpreters and captioning professionals of any educational institution in the world, yet demand for access services continues to grow. That’s why Behm started looking for other viable solutions, including automatic speech recognition, known as ASR.

Automatic speech recognition

The center’s preliminary experimentation with ASR in the spring of 2016 fell short of expectations, according to Brian Trager, an NTID alum and now CAT associate director. The first system the center’s researchers tested was so inaccurate that they could not follow what people were saying from its output, especially when the discussion turned to scientific and technical terms.

“I became a head nodder again,” said Trager, who is deaf and spent his childhood struggling to read lips. Back then, he often nodded in agreement even when he had no idea what the conversation was about.

“Not only that, the text was difficult to read,” he continued. “For example, there was one teacher who was talking about 9/11 and the system spelled it out ‘n-i-n-e e-l-e-v-e-n’ and the same for years, the same for currency. It is just raw data. My eyes got tired. There weren’t periods or commas. There was no spatial way to understand.”

That summer, an undergraduate working in the CAT lab experimented with the ASR offerings from various technology companies. Microsoft’s looked promising. “Numbers like 9/11 really came up 9 slash 11, as you would imagine, and 2001 came up 2001. It had punctuation. And that alone was great because the readability factor really improved. That’s a huge difference. It was something that was much more comfortable and easy to access,” said Trager.
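The difference Trager describes is what speech engineers call inverse text normalization: turning spoken forms such as “nine eleven” or “two thousand one” back into written forms such as “9/11” and “2001.” The sketch below is a toy version, assuming hand-written word tables and a crude month/day heuristic; it is not how Microsoft’s service works.

```python
# Hypothetical word tables for a toy inverse text normalizer (ITN).
SMALL = {w: i for i, w in enumerate(
    "zero one two three four five six seven eight nine ten eleven twelve "
    "thirteen fourteen fifteen sixteen seventeen eighteen nineteen".split())}
TENS = {w: 10 * i for i, w in enumerate(
    "twenty thirty forty fifty sixty seventy eighty ninety".split(), start=2)}
SCALES = {"hundred", "thousand"}
NUMBER_WORDS = SMALL.keys() | TENS.keys() | SCALES


def _value(tokens):
    """Convert a run of number words such as ['two', 'thousand', 'one'] to an int."""
    total, current = 0, 0
    for tok in tokens:
        if tok in SMALL:
            current += SMALL[tok]
        elif tok in TENS:
            current += TENS[tok]
        elif tok == "hundred":
            current *= 100
        elif tok == "thousand":
            total += current * 1000
            current = 0
    return total + current


def _continues(prev, tok):
    """Decide whether tok extends the current number or starts a new one."""
    if tok in SCALES or prev in SCALES:
        return True  # "two thousand", "thousand one", "hundred forty"
    if prev in TENS:
        return tok in SMALL and 1 <= SMALL[tok] <= 9  # "twenty one"
    return False  # "nine eleven" is two separate numbers


def normalize(text):
    """Rewrite spelled-out numbers as digits; join adjacent month/day pairs with '/'."""
    items, run = [], []  # items: ("num", int) or ("word", str)

    def flush():
        if run:
            items.append(("num", _value(run)))
            run.clear()

    for tok in text.lower().split():
        if tok in NUMBER_WORDS:
            if run and not _continues(run[-1], tok):
                flush()
            run.append(tok)
        else:
            flush()
            items.append(("word", tok))
    flush()

    out, i = [], 0
    while i < len(items):
        kind, val = items[i]
        nxt = items[i + 1] if i + 1 < len(items) else None
        # Crude date heuristic: two adjacent numbers that could be month/day.
        if kind == "num" and nxt and nxt[0] == "num" and 1 <= val <= 12 and 1 <= nxt[1] <= 31:
            out.append(f"{val}/{nxt[1]}")
            i += 2
        else:
            out.append(str(val))
            i += 1
    return " ".join(out)


print(normalize("after nine eleven in two thousand one"))
# → after 9/11 in 2001
```

The heuristics break easily: “three four” becomes “3/4,” and a spoken year such as “nineteen ninety nine” comes out as “19 99.” Handling those cases well is why production systems rely on much richer grammars or learned models.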

NTID’s CAT researchers then learned about a beta version of a Microsoft Cognitive Service called the Custom Speech Service, which enhances automatic speech recognition by allowing developers to build custom language models for domain-specific vocabulary. The researchers inquired about joining the beta. Less than 24 hours later, they received an email from Will Lewis, a principal technical program manager for machine translation in Microsoft’s research organization.

Language models for the classroom

Lewis and his team at Microsoft introduced CAT researchers to Microsoft Translator, and by the fall of 2017 the teams were collaborating on building custom language models specific to course material and piloting the technology in classrooms with the Presentation Translator add-in for PowerPoint.

To build the models, the researchers mined the university’s database of transcriptions from more than a decade of C-Print captions of specific professors’ lectures, as well as notes that professors type into their PowerPoint presentations. The AI in the Custom Speech Service uses this data to build models for how domain-specific words are pronounced. When a speaker then uses the words, the system recognizes them and displays the text in the real-time transcript.
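The article does not describe the Custom Speech Service’s interface, so the sketch below makes no calls to it. It only illustrates, under stated assumptions, one plausible data-preparation step: scanning past lecture transcripts and slide notes for terms that are frequent in the course but missing from a general vocabulary. The function name, the `general_vocab` set and the `min_count` threshold are all hypothetical.

```python
import re
from collections import Counter


def domain_terms(documents, general_vocab, min_count=2):
    """Return (word, count) pairs frequent in course text but absent from general_vocab."""
    counts = Counter()
    for doc in documents:
        # Lowercase and keep alphabetic tokens; hyphenated terms stay whole.
        counts.update(re.findall(r"[a-z]+(?:-[a-z]+)*", doc.lower()))
    return [
        (word, n)
        for word, n in counts.most_common()
        if n >= min_count and word not in general_vocab
    ]


# Hypothetical snippets standing in for C-Print transcripts and slide notes.
transcripts = [
    "Nociceptors detect pain; nociceptors are free nerve endings.",
    "Mechanoreceptors and nociceptors differ.",
]
general = {"detect", "pain", "are", "free", "nerve", "endings", "and", "differ"}
print(domain_terms(transcripts, general))
# → [('nociceptors', 3)]
```

A term list like this is only a starting point; the real models were built from more than a decade of transcripts, where frequency counts become far more informative than in a two-sentence example.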

Chris Campbell is an NTID alum who is now a research associate professor in the CAT, where he leads the center’s ASR deployment efforts. In the fall of 2017, he taught a programming fundamentals course to NTID students. He teaches using American Sign Language.

“Sometimes, we have students who come to NTID who are not fluent in sign language; they depend on English. So, for my class I requested to try ASR to see how it would go using an interpreter,” he said.

The interpreter wore a headset and voiced everything Campbell signed into the microphone. Microsoft Presentation Translator displayed the captions below his PowerPoint slides and on his students’ personal devices running the Microsoft Translator app. As Campbell signed, he said, he watched his students’ eyes bounce from him, to the captions, to the interpreter. The amount of time they spent on any one information source, he noted, depended on each student’s comfort with ASL and level of hearing.

“I was able to listen to the interpreter and read the captions on my laptop,” said Amanda Bui, a hard-of-hearing student in the class who is not fluent in ASL and grew up in Fremont, California, without any access services. “It was easier for me to learn the coding languages.”

Accessibility for all

Connelly, the general biology professor, sees the automatic captioning technology as augmenting, not replacing, the work of ASL interpreters, because ASL, which can convey several words in a single gesture, is less taxing than reading. But used in combination with interpreters, the technology increases access for a wider range of students in the classroom, especially those who are less proficient in ASL, such as Joseph Adjei, her student from Ghana.

What’s more, she noted, Microsoft Translator allows students to save the transcripts, which has transformed how her entire class relates to the course material.

“They know every goofy word I said today,” she said. “Lecture is no longer one and done. It is one and done of me standing in front of them, but they have me on paper, they have me in text form. It has really changed when they come to my office. They don’t come with ‘I missed this word’ or ‘I’ve missed this definition.’ They come with ‘I don’t know why this applies to this.’ It has changed the focus for us.”

Students who are hearing regularly check the captions in class to pick up material they missed and save the transcripts as study aids, Connelly added. During the fall semester, when the only deaf student in her evolutionary biology class, who had been piloting the ASR system, dropped the course, Connelly turned off the captions. The hearing students revolted, and Presentation Translator ran for the rest of the semester.

Jenny Lay-Flurrie said she loves those types of stories because they reinforce the value of investing in accessibility.

“From a pure product engineering design perspective,” she said, “if you design for accessibility, you design for all, including the 1 billion plus with disabilities.”

Special thanks to Cynthia Collward, senior interpreter at RIT, for providing interpretation services.

Top image: Sandra Connelly teaches a general biology class at RIT while Andrea Whittemore interprets her words into American Sign Language. Microsoft Presentation Translator provides real-time captions on the screen behind her. Photo by John Brecher.

Related:

- Learn more about Microsoft Translator for education and Presentation Translator

- Learn more about the National Technical Institute for the Deaf at RIT

- Read: Historic achievement: Microsoft researchers reach human parity in conversational speech recognition

- Read: Microsoft Translator erodes language barrier for in-person conversations